Novelty Detection in Multispectral Images

Detecting novel features in multispectral images taken with the Mastcam imaging system on the Mars Curiosity rover.

Detecting changes in surface features, e.g. recurring slope lineae or impact craters, on Mars using images taken by orbital imaging systems.

Detecting high silica and other anomalous geochemistry soils in DAN observations throughout Gale Crater on Mars.

Assessing the quality of compressed images given the scientific context with which the image was acquired for the Mastcam imaging system on the Mars Curiosity rover.

Detecting novel features in multispectral images taken with the Mastcam imaging system on Mars.

Computers and Geosciences, 2018

The Mastcam color imaging system on the Mars Science Laboratory Curiosity rover acquires images that are often JPEG compressed before being downlinked to Earth. Depending on the context of the observation, this compression can result in image artifacts that might introduce problems in the scientific interpretation of the data and might require the image to be retransmitted losslessly. We propose to streamline the tedious process of manually analyzing images using context-dependent image quality assessment, a process wherein the context and intent behind the image observation determine the acceptable image quality threshold. We propose a neural network solution for estimating the probability that a Mastcam user would find the quality of a compressed image acceptable for science analysis. We also propose an automatic labeling method that avoids the need for domain experts to label thousands of training examples. We performed multiple experiments to evaluate the ability of our model to assess context-dependent image quality, the efficiency a user might gain when incorporating our model, and the uncertainty of the model given different types of input images. We compare our approach to the state of the art in no-reference image quality assessment. Our model correlates well with the perceptions of scientists assessing context-dependent image quality and could result in significant time savings when included in the current Mastcam image review process.

Recommended citation: Kerner, H. R., Bell III, J. F., Ben Amor, H. (2018). "Context-Dependent Image Quality Assessment of JPEG-Compressed Mars Science Laboratory Mastcam Images using Convolutional Neural Networks." Computers and Geosciences, 118(2018), 109-121, doi:10.1016/j.cageo.2018.06.001.

AAAI Conference on Artificial Intelligence, 2019

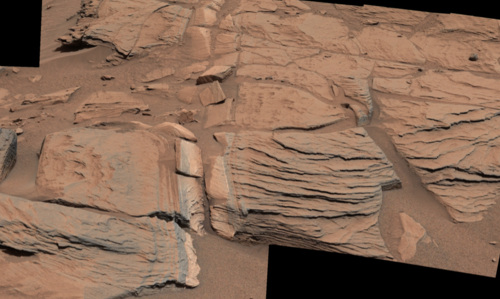

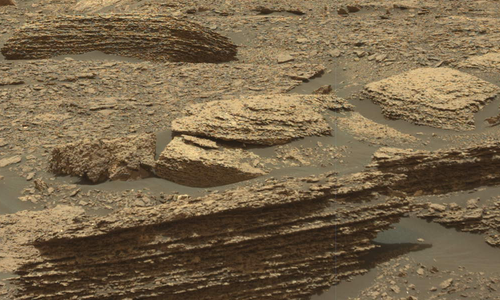

In this work, we present a system based on convolutional autoencoders for detecting novel features in multispectral images. We introduce SAMMIE: Selections based on Autoencoder Modeling of Multispectral Image Expectations. Previous work using autoencoders employed the scalar reconstruction error to classify new images as novel or typical. We show that a spatial-spectral error map can enable both accurate classification of novelty in multispectral images as well as human-comprehensible explanations of the detection. We apply our methodology to the detection of novel geologic features in multispectral images of the Martian surface collected by the Mastcam imaging system on the Mars Science Laboratory Curiosity rover.

Recommended citation: Kerner, H. R., Wellington, D. F., Wagstaff, K. L., Bell III, J. F., Ben Amor, H. (2019). "Novelty Detection for Multispectral Images with Application to Planetary Exploration." In Proceedings of the AAAI Conference on Artificial Intelligence, 33(1), 9484-9491, doi:10.1609/aaai.v33i01.33019484.
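As a rough illustration of the distinction drawn in the abstract above, the following sketch contrasts a scalar reconstruction error with a per-pixel, per-band error map. It assumes NumPy is available and uses a stand-in `reconstruction` array in place of a trained autoencoder's output. The function names and toy data are hypothetical and are not taken from SAMMIE.

```python
import numpy as np

def error_map(image, reconstruction):
    """Per-pixel, per-band squared error with the input's shape (H, W, bands)."""
    return (image - reconstruction) ** 2

def scalar_error(image, reconstruction):
    """Single mean squared error summarizing the whole image."""
    return float(np.mean(error_map(image, reconstruction)))

# Toy 4x4 image with 3 bands. The "reconstruction" is a hypothetical stand-in
# for autoencoder output that misses a feature at pixel (1, 2) in band 0.
image = np.zeros((4, 4, 3))
image[1, 2, 0] = 1.0
reconstruction = np.zeros_like(image)

emap = error_map(image, reconstruction)
row, col, band = np.unravel_index(np.argmax(emap), emap.shape)

print(scalar_error(image, reconstruction))  # one number for the whole image
print(row, col, band)                       # location and band of the largest error
```

The scalar only says that something was reconstructed poorly, while the map also indicates where in the image and in which band, which is the kind of information an explanation of a detection would need.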

Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 2019

Ongoing planetary exploration missions are returning large volumes of image data. Identifying surface changes in these images, e.g., new impact craters, is critical for investigating many scientific hypotheses. Traditional approaches to change detection rely on image differencing and manual feature engineering. These methods can be sensitive to irrelevant variations in illumination or image quality and typically require before and after images to be co-registered, which itself is a major challenge. Additionally, most prior change detection studies have been limited to remote sensing images of Earth. We propose a new deep learning approach for binary patch-level change detection involving transfer learning and nonlinear dimensionality reduction using convolutional autoencoders. Our experiments on diverse remote sensing datasets of Mars, the Moon, and Earth show that our methods can detect meaningful changes with high accuracy using a relatively small training dataset despite significant differences in illumination, image quality, imaging sensors, co-registration, and surface properties. We show that the latent representations learned by a convolutional autoencoder yield the most general representations for detecting change across surface feature types, scales, sensors, and planetary bodies.

Recommended citation: Kerner, H. R., Wagstaff, K. L., Bue, B., Gray, P. C., Bell III, J. F., Ben Amor, H. (2019). "Deep Learning Methods Toward Generalized Change Detection on Planetary Surfaces with Convolutional Autoencoders and Transfer Learning." Journal of Selected Topics in Applied Earth Observations and Remote Sensing, 12(10), 3900-3918, doi:10.1109/JSTARS.2019.2936771.

Journal of Geophysical Research Planets, 2020

The Dynamic Albedo of Neutrons (DAN) instrument onboard Mars Science Laboratory (MSL) is the first spacecraft-based active neutron investigation. DAN uses neutron die-away, a standard technique used in active nuclear spectroscopy, to measure the abundance and depth distribution of hydrogen and neutron-absorbing elements in the top $\sim$0.5 m of the Mars subsurface. We present the first comprehensive study of the intrinsic variability in rover-based neutron die-away data using simulated DAN measurements with a range of geochemistries relevant to equatorial and high-latitude environments on Mars. Our analysis shows that for thermal neutron die-away, the total neutron counts exhibit the greatest variance, followed by the timing of neutron arrival. We present an analysis of the sensitivity of these properties for a variety of elemental compositions that might be observed by DAN or future instruments. We found that neutron die-away is most sensitive to variations in H content when the abundances of H, Cl, and Fe are relatively low (e.g., in equatorial regions including Gale crater). When the abundance of H is high (e.g., in poleward regions of Mars or icy bodies), neutron die-away is most sensitive to variations in neutron-absorbing elements (e.g., Fe and Cl). Using this understanding of neutron die-away sensitivities and variability, we performed an outlier analysis of DAN measurements acquired between sols 1-2080. We found that most outliers along the Curiosity traverse correspond with measurements having abnormally low or high bulk neutron absorption cross sections, and interpretations may include high-silica fracture-associated halos and felsic igneous rocks or sediments.

Recommended citation: Kerner, H. R., Hardgrove, C. J., Czarnecki, S., Gabriel, T. S. J., Mitrofanov, I. G., Litvak, M. L., Sanin, A. B., Lisov, D. I. (2020). "Analysis of active neutron measurements from the Mars Science Laboratory Dynamic Albedo of Neutrons instrument: Intrinsic variability, outliers, and implications for future investigations." Journal of Geophysical Research: Planets, 125(5), e2019JE006264.

International Conference on Learning Representations (ICLR) Workshops, 2020

Accurate crop type maps provide critical information for ensuring food security, yet there has been limited research on crop type classification for smallholder agriculture, particularly in sub-Saharan Africa where risk of food insecurity is highest. Publicly-available ground-truth data such as the newly-released training dataset of crop types in Kenya (Radiant MLHub) are catalyzing this research, but it is important to understand the context of when, where, and how these datasets were obtained when evaluating classification performance and using them as a benchmark across methods. In this paper, we provide context for the new western Kenya dataset which was collected during an atypical 2019 main growing season and demonstrate classification accuracy up to 64% for maize and 70% for cassava using k Nearest Neighbors—a fast, interpretable, and scalable method that can serve as a baseline for future work.

Recommended citation: Kerner, H. R., Nakalembe, C., Becker-Reshef, I. (2020). "Field-level crop type classification with k-nearest neighbors: A baseline for a new Kenya smallholder dataset." In Proceedings of the International Conference on Learning Representations (ICLR) Workshops.
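For readers unfamiliar with the baseline named in the abstract above, here is a minimal k-nearest-neighbors sketch using only the Python standard library. The two-feature "field summaries", the labels, and the choice of `k=3` are made-up illustrations. They are not the Kenya dataset or the paper's configuration.

```python
import math
from collections import Counter

def knn_predict(train_features, train_labels, query, k=3):
    """Return the majority label among the k training points nearest to query."""
    distances = sorted(
        (math.dist(features, query), label)
        for features, label in zip(train_features, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical per-field feature vectors (not real data).
train_features = [
    (0.20, 0.10), (0.25, 0.15), (0.30, 0.10),
    (0.80, 0.90), (0.85, 0.80), (0.90, 0.95),
]
train_labels = ["maize", "maize", "maize", "cassava", "cassava", "cassava"]

print(knn_predict(train_features, train_labels, (0.22, 0.12)))
```

Because a prediction is just a vote among stored examples, the neighbors themselves can be inspected to see why a field received its label, which is one reason the method is often described as interpretable.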

Data Mining and Knowledge Discovery, 2020

Science teams for rover-based planetary exploration missions like the Mars Science Laboratory Curiosity rover have limited time for analyzing new data before making decisions about follow-up observations. There is a need for systems that can rapidly and intelligently extract information from planetary instrument datasets and focus attention on the most promising or novel observations. Several novelty detection methods have been explored in prior work for three-channel color images and non-image datasets, but few have considered multispectral or hyperspectral image datasets for the purpose of scientific discovery. We compared the performance of four novelty detection methods: Reed-Xiaoli (RX) detectors, principal component analysis (PCA), autoencoders, and generative adversarial networks (GANs). We also compared the ability of each method to provide explanatory visualizations to help scientists understand and trust predictions made by the system. We show that pixel-wise RX and autoencoders trained with structural similarity (SSIM) loss can detect morphological novelties that are not detected by PCA, GANs, and mean squared error (MSE) autoencoders, but that the latter methods are better suited for detecting spectral novelties; that is, the best method for a given setting depends on the type of novelties that are sought. Additionally, we find that autoencoders provide the most useful explanatory visualizations for enabling users to understand and trust model detections, but that existing GAN approaches to novelty detection may be limited in this respect.

Recommended citation: Kerner, H. R., Wagstaff, K. L., Bue, B. D., Wellington, D. F., Jacob, S., Horton, P., Bell III, J. F., Kwan, C., Ben Amor, H. (2020). "Comparison of novelty detection methods for multispectral images in rover-based planetary exploration missions." Data Mining and Knowledge Discovery, 1-24, doi:10.1007/s10618-020-00697-6.
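Of the four methods compared in the abstract above, the RX detector is the simplest to write down: it scores each pixel by the squared Mahalanobis distance of its spectrum from the background statistics. The sketch below is a global variant that estimates the mean and covariance from all pixels of a single image. That choice, the function name, and the synthetic data are assumptions for illustration and may differ from the configuration evaluated in the paper.

```python
import numpy as np

def rx_scores(image):
    """Global RX: squared Mahalanobis distance of each pixel spectrum
    from the mean spectrum of the image (H, W, bands)."""
    h, w, bands = image.shape
    pixels = image.reshape(-1, bands).astype(float)
    centered = pixels - pixels.mean(axis=0)
    cov = np.cov(centered, rowvar=False)
    cov_inv = np.linalg.pinv(cov)  # pseudo-inverse tolerates a singular covariance
    scores = np.einsum("ij,jk,ik->i", centered, cov_inv, centered)
    return scores.reshape(h, w)

# Synthetic 8x8 scene with 4 bands: a nearly uniform background plus
# one spectrally unusual pixel (hypothetical values).
rng = np.random.default_rng(0)
image = rng.normal(0.5, 0.01, size=(8, 8, 4))
image[3, 5] = [0.9, 0.1, 0.9, 0.1]

scores = rx_scores(image)
print(np.unravel_index(np.argmax(scores), scores.shape))
```

The resulting score array has the same height and width as the image, so it can be viewed directly as a heat map of spectral unusualness.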

ACM SIGKDD Conference on Data Mining and Knowledge Discovery (KDD) Workshops, 2020

Spatial information on cropland distribution, often called cropland or crop maps, is a critical input for a wide range of agriculture and food security analyses and decisions. However, high-resolution cropland maps are not readily available for most countries, especially in regions dominated by smallholder farming (e.g., sub-Saharan Africa). These maps are especially critical in times of crisis when decision makers need to rapidly design and enact agriculture-related policies and mitigation strategies, including providing humanitarian assistance, dispersing targeted aid, or boosting productivity for farmers. A major challenge for developing crop maps is that many regions do not have readily accessible ground truth data on croplands necessary for training and validating predictive models, and field campaigns are not feasible for collecting labels for rapid response. We present a method for rapid mapping of croplands in regions where little to no ground data is available. We present results for this method in Togo, where we delivered a high-resolution (10 m) cropland map in under 10 days to facilitate rapid response to the COVID-19 pandemic by the Togolese government. This demonstrated a successful transition of machine learning applications research to operational rapid response in a real humanitarian crisis. All maps, data, and code are publicly available to enable future research and operational systems in data-sparse regions.

Recommended citation: Kerner, H. R., Tseng, G., Becker-Reshef, I., Barker, B., Munshell, B., Paliyam, M., Hosseini, M. (2020). "Rapid Response Crop Maps in Data Sparse Regions." In Proceedings of the ACM SIGKDD Conference on Data Mining and Knowledge Discovery (KDD) Workshops.

ACM SIGKDD Conference on Data Mining and Knowledge Discovery (KDD) Workshops, 2020

Crop type classification using satellite observations is an important tool for providing insights about planted area and enabling estimates of crop condition and yield, especially within the growing season when uncertainties around these quantities are highest. As the climate changes and extreme weather events become more frequent, these methods must be resilient to domain shifts that may occur, for example, due to shifts in planting timelines. In this work, we present an approach for within-season crop type classification using moderate spatial resolution (30 m) satellite data that addresses domain shift related to planting timelines by normalizing inputs by crop growth stage. We use a neural network leveraging both convolutional and recurrent layers to predict if a pixel contains corn, soybeans, or another crop or land cover type. We evaluated this method for the 2019 growing season in the midwestern US, during which planting was delayed by as much as 1-2 months due to extreme weather that caused record flooding. We show that our approach using growth stage-normalized time series outperforms fixed-date time series, and achieves overall classification accuracy of 85.4% prior to harvest (September-November) and 82.8% by mid-season (July-September).

Recommended citation: Kerner, H., Sahajpal, R., Skakun, S., Becker-Reshef, I., Barker, B., Hosseini, M., Puricelli, E., and Gray, P. (2020). "Resilient In-Season Crop Type Classification in Multispectral Satellite Observations using Growth Stage Normalization." In Proceedings of the ACM SIGKDD Conference on Data Mining and Knowledge Discovery (KDD) Workshops.

International Symposium on Artificial Intelligence, Robotics and Automation in Space (I-SAIRAS), 2020

Current Mars surface exploration is primarily pre-scripted on a day-by-day basis. Mars rovers have a limited ability to autonomously select targets for follow-up study that match pre-defined target signatures. However, when exploring new environments, we are also interested in observations that differ from what previously has been seen. In this work, we develop and evaluate methods for a Mars rover to use novelty to guide the selection of observation targets with the goal of accelerating discovery. In a study comparing three image content representations and five novelty-based ranking methods, we found that the Isolation Forest identified the largest number of novel targets using a combination of intensity and shape features to represent the candidate targets. It was followed closely by the Local RX algorithm using raw pixel features. All algorithms achieved performance well above alternatives such as random selection or selecting the best match to current science objectives, which do not account for novelty.

Recommended citation: Wagstaff, K. L., Francis, R. F., Kerner, H. R., Lu, S., Nerrise, F., Bell III, J. F., Doran, G., and Rebbapragada, U. (2020). "Novelty-driven onboard targeting for Mars rovers." International Symposium on Artificial Intelligence, Robotics and Automation in Space (I-SAIRAS).

Remote Sensing, 2020

On 10 August 2020, a series of intense and fast-moving windstorms known as a derecho caused widespread damage across the agricultural regions of Iowa (the top US corn-producing state). This severe weather event bent and flattened crops over approximately one-third of the state. Immediate evaluation of the disaster’s impact on agricultural lands, including maps of crop damage, was critical to enabling a rapid response by government agencies, insurance companies, and the agricultural supply chain. Given the very large area impacted by the disaster, satellite imagery stands out as the most efficient means of estimating the disaster impact. In this study, we used time-series of Sentinel-1 data to detect the impacted fields. We developed an in-season crop type map using Harmonized Landsat and Sentinel-2 data to assess the impact on important commodity crops. We intersected a SAR-based damage map with an in-season crop type map to create damaged area maps for corn and soybean fields. In total, we identified 2.59 million acres as damaged by the derecho, consisting of 1.99 million acres of corn and 0.6 million acres of soybean fields. We also categorized the impacted fields into three classes: mild, medium, and high impact. In total, 1.087 million acres of corn and 0.206 million acres of soybean were categorized as high-impact fields.

Recommended citation: Hosseini, M., Kerner, H. R., Sahajpal, R., Puricelli, E., Lu, Y. H., Lawal, A. F., Humber, M. L., Mitkish, M., Meyer, S., and Becker-Reshef, I. (2020). "Evaluating the Impact of the 2020 Iowa Derecho on Corn and Soybean Fields Using Synthetic Aperture Radar." Remote Sensing, 12(23), 3878.
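The intersection step mentioned in the abstract above (overlaying a damage map on a crop type map and totaling area per crop) can be sketched with NumPy boolean masks. The class codes, the toy 3x3 rasters, and the `pixel_area` value are hypothetical. The sketch also assumes both rasters are already on the same grid, which the abstract does not describe.

```python
import numpy as np

# Hypothetical class codes for the crop type raster.
OTHER, CORN, SOYBEAN = 0, 1, 2

def damaged_area_by_crop(damage_mask, crop_map, pixel_area):
    """Total damaged area per crop, in the units of pixel_area.

    damage_mask: boolean array, True where a pixel is flagged as damaged.
    crop_map:    integer array of class codes on the same grid.
    """
    return {
        name: float(np.count_nonzero(damage_mask & (crop_map == code)) * pixel_area)
        for name, code in (("corn", CORN), ("soybean", SOYBEAN))
    }

crop_map = np.array([
    [CORN,  CORN,    SOYBEAN],
    [CORN,  SOYBEAN, SOYBEAN],
    [OTHER, OTHER,   CORN],
])
damage_mask = np.array([
    [True, False, True],
    [True, False, False],
    [True, False, False],
])

areas = damaged_area_by_crop(damage_mask, crop_map, pixel_area=0.5)
print(areas)
```

Damaged pixels that fall outside the corn and soybean classes are ignored, so the per-crop totals sum to the damaged area of those two crops only.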

Neural Information Processing Systems (NeurIPS) Workshops, 2020

Spatial information about where crops are being grown, known as cropland maps, is a critical input for analyses and decision-making related to food security and climate change. Despite a widespread need for readily-updated annual and in-season cropland maps at the management (field) scale, these maps are unavailable for most regions at risk of food insecurity. This is largely due to lack of in-situ labels for training and validating machine learning classifiers. Previously, we developed a method for binary classification of cropland that learns from sparse local labels and abundant global labels using a multi-headed LSTM and time-series multispectral satellite inputs over one year. In this work, we present a new method that uses an autoregressive LSTM to classify cropland during the growing season (i.e., partially-observed time series). We used these methods to produce publicly-available 10m-resolution cropland maps in Kenya for the 2019-2020 and 2020-2021 growing seasons. These are the highest-resolution and most recent cropland maps publicly available for Kenya. These methods and associated maps are critical for scientific studies and decision-making at the intersection of food security and climate change.

Recommended citation: Tseng, G., Kerner, H., Nakalembe, C., and Becker-Reshef, I. (2020). "Annual and in-season mapping of cropland at field scale with sparse labels." Neural Information Processing Systems (NeurIPS) 2020 Workshops (Tackling Climate Change with Machine Learning workshop).

IEEE Aerospace Conference, 2021

While innovations in scientific instrumentation have pushed the boundaries of Mars rover mission capabilities, the increase in data complexity has pressured Mars Science Laboratory (MSL) and future Mars rover operations staff to quickly analyze complex data sets to meet progressively shorter tactical and strategic planning timelines. MSLWEB is an internal data tracking tool used by operations staff to perform first pass analysis on MSL image sequences, a series of products taken by the Mast camera, Mastcam. Mastcam consists of a pair of 400-1000 nm wavelength cameras on MSL’s Remote Sensing Mast that, among other functions, uses a filter wheel to produce multispectral images by creating a sequence of products at different wavelengths. Mastcam’s multiband multispectral image sequences require more complex analysis compared to standard 3-band RGB images. Typically, these are analyzed by the inspection of false color images created to aid visualization, such as band ratios between different spectral indices that can highlight specific potential mineralogic differences among iron-bearing phases, and decorrelation stretches to enhance the color differences between multiple filters. Given the short time frame of tactical planning in which downlinked images might need to be analyzed (within 5-10 hours before the next uplink), there exists a need to triage analysis time to focus on the most important sequences and parts of a sequence. We address this need by creating products for MSLWEB that use novelty detection to help operations staff identify unusual data that might be diagnostic of new or atypical compositions or mineralogies detected within an imaging scene. This was achieved in two ways: 1) by creating products for each sequence to identify novel regions in the image, and 2) by assigning multispectral sequences a sortable novelty score. 
These new products provide colorized heat maps of inferred novelty that operations staff can use to rapidly review downlinked data and focus their efforts on analyzing potentially new kinds of diagnostic multispectral signatures. This approach has the potential to guide scientists to new discoveries by quickly drawing their attention to often subtle variations not detectable with simple color composites. The products developed in this work have shown promising benefits for integration into mission operations by potentially decreasing tactical operations planning time through guided triage.

Recommended citation: Horton, P., Kerner, H., Jacob, S., Cisneros, E., Wagstaff, K. L., and Bell III, J. F. (2021). "Integrating Novelty Detection Capabilities with MSL Mastcam Operations to Enhance Data Analysis." IEEE Aerospace Conference.
